The Development Process

Developing a system with many moving parts can be difficult, frustrating, and expensive. In addition to the complexity of the system itself, the limitations of available tools, and whatever baggage the various contributors bring to the development process, there is a basic built-in tension between time, money, and functionality: you can have nothing, for free, right now; anything more takes time, and how much time depends on how much money you are willing to spend.

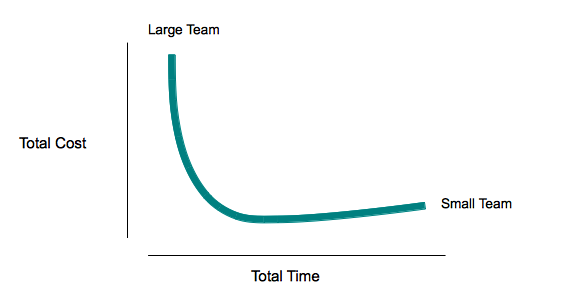

There is, of course, no such thing as a maximum cost or a maximum time as there are plenty of entities in this world which will be happy to drag out work indefinitely and take as much money as you have to offer. There is, however, an interplay between the minimum cost and a minimum development time, and it typically looks something like this:

Essentially, the type of system you want to build determines how much work there is as well as how many contributors can effectively work on different tasks concurrently. The idea, then, is to find the happy spot on that curve (towards the quick and inexpensive lower left) where you can build what you need in a reasonable amount of time without paying more than necessary.
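The trade-off can be made concrete with a toy model. The sketch below is an illustration only, not a model taken from this article: it assumes (Amdahl-style) that a fixed share of the work can be split across contributors while the rest is inherently sequential, and that everybody is paid for the full duration. The function name `schedule`, its parameters, and the numbers used are all hypothetical.

```python
def schedule(total_work, parallel_fraction, contributors, rate=1.0):
    """Return (time, cost) for a toy staffing model.

    total_work: effort in person-months if one person did everything
    parallel_fraction: share of the work that can be split up (0..1), an assumption
    contributors: number of people working concurrently
    rate: cost per person-month (hypothetical unit)
    """
    serial = total_work * (1.0 - parallel_fraction)
    parallel = total_work * parallel_fraction / contributors
    time = serial + parallel
    cost = time * contributors * rate  # everyone is paid for the whole duration
    return time, cost


if __name__ == "__main__":
    for n in (1, 2, 4, 8):
        t, c = schedule(total_work=24, parallel_fraction=0.75, contributors=n)
        print(f"{n} contributors: {t:.2f} months, cost {c:.2f}")
```

Under these assumptions, adding contributors shortens the schedule with diminishing returns while the cost keeps rising, which is one way to arrive at a curve with a "happy spot" somewhere in the middle.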

As with most projects, the most important questions to address usually are:

1) what is being built? (this may include technological constraints, i.e., how)

2) who is building which part?

3) how much will it cost to build and to own?

They may seem like straightforward questions, but there are whole worlds of subtleties and painful lessons hiding within each.

The nice thing about these three questions is that

- they cover the important parts

- they are universal - regardless of your development philosophy, you need to answer them

- they lend themselves to a simple problem-solving approach: answer them in order until you have satisfactory answers to all three (hint: you will probably have to backtrack a few times).

Complexity may require you to break down the system into smaller pieces. If you do, you need to follow a certain protocol for breaking down your answers to the above questions and merging them back together. Also, there are many ways of partitioning a system into component parts. Done right, partitioning makes the most daunting constructions manageable. Done wrong, it can make a tangled mess out of what could have been a reasonably straightforward project.

While it's nice to know what you are building before you start, it takes work and technical know-how in order to prune the decision tree and flesh out the details, meaning a lot of work can go into deciding what the work entails. If you have an idea of useful subsets, you can develop a smaller solution earlier and for less, then extend that system with the benefit of early experience with a) what you have developed already and b) the development resources available to you.

In the extreme, you can go agile and split up development into many small pieces that can be specified, implemented, and tested in parallel, even giving you the freedom to add features as you go until you run out of requested features or time or money. This can be a useful and appropriate development methodology but comes with certain costs and requirements.

At every level, making the important decisions first and in the right order sets the foundation for everything that follows.

What is being built?

At some point, somebody has to translate the big idea into some sort of specification. The process of writing down details will force you to discover holes and contradictions in what you had envisioned, which is important in and of itself. The resulting specification is essential to what comes next:

- it lets you determine what resources you need (who and how much)

- it tells the developers what needs to be built (and how)

- it allows you to determine whether or not the developers are done (by comparing the system's behavior with the specified behavior)

You can come up with three different sets of specifications (which you will then have to keep in sync) or you can come up with a single specification that satisfies all three needs, in which case you may need some help with how best to format these specifications such that they are usable to both human and machine.

What level of detail you specify depends largely on your relationship with your developers. But even if you trust your developers to read your mind and fill in the blanks perfectly, you still need to spell out what is important to you. Specifying too much wastes your time and may even keep your developers from coming up with something better. Specifying too little may force you to change the specifications after developers have started their work, requiring them to throw away work they have already done.

The specifications need to be sensitive to what external (off the shelf) components you are incorporating into your project. For instance, if you know that you are basing your system on a certain version of a certain shopping cart framework, then you should definitely not be specifying every feature in that framework. Instead, your focus needs to be on how that component is to be customized, configured, and integrated.

During development, new questions will come up as the implementation forces resolution of previously unnoticed contradictions in the specification. Responsible developers will also point out data life cycle issues not addressed by the specification. For instance, what is the life span of information such as customer purchases, browser sessions, or alerts, and who is responsible for disposing of them when and how? These questions need to be resolved in one of the following ways:

- they may require you to shift the focus of development to a different area while a solution is found

- they may be shelved to be dealt with later (in a list of known issues)

- additional specifications may need to be created or existing specifications may need to be changed

- they may require additional development, testing, or deployment infrastructure

The opposite can happen as well. It may be possible to use an existing service or tool instead of building one, if only the specifications could be relaxed or changed.

Regardless of format and level of detail, you should specify what you need to see in the end, not more, not less, and be ready to improve and maintain this information in response to the developers' questions and suggestions. While you create and maintain the specifications, try to be honest with yourself as to exactly which cans you are kicking down the road.

Who is building which part?

The Team

System development requires a number of participants who bring the necessary expertise and skills to the project. They will require some tools and a certain amount of infrastructure and plumbing. They have to be able to trust each other to do their part. They have to be able and willing to work together toward a specific common goal.

At least one of them will need to know how all the moving parts fit together, even if he or she does not understand all of the inner workings of each component. This person is sometimes called the architect. Everybody else gets to focus on their corner of the project, according to their own strengths and abilities.

In a functional team, the contributors understand what is expected of them and their creations, value each other's contributions, and use the available tools following established conventions in order to make progress effectively. Experienced teams often start with a set of group standards based on current industry best practices. These become part of the documentation for the system being developed and form a starting point for anybody joining the team.

Some amount of adult supervision will be required within anything but the smallest development groups in order to ensure the contributors have what they need (including what they need from each other) in order to remain productive. Relationships between groups (e.g., between vendors who may have conflicting priorities) also need to be managed. At both levels, clear expectations, explicit conventions, and appropriate incentives should be in place.

What about testing?

Not surprisingly, the people using a piece of software as well as the people who pay for its development (not always one and the same) care less about how its creators have delineated its innards and more about what it does. This is why, while some developers are working on building the system, a second group should work on something that will allow you to test the system against the specification.

If this sounds overly cumbersome, consider this:

- you will need to test the system being built against every single part of the specification many times during development

- history has shown over and over that any kind of software undergoing change will break unless it is also being tested

- the minimum cost of a change in specification and/or development work is largely determined by the cost of testing, meaning that even the smallest change may become very expensive unless your testing is automated

- if you are willing to write down the specifications in a suitable format (or formats), then the specification itself becomes a large part of the automated test suite, created and maintained at little or no cost

- disagreements on how well the system works (between developers and those paying for development) are much easier to settle, or can be avoided altogether, if there is a readily available arbitrator in the form of an automated test suite
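As a minimal sketch of what "the specification becomes the test suite" could look like, the example below keeps the scenarios as plain data and runs them against the system. Everything in it is hypothetical: the `Cart` class stands in for the system under development, and the `SPEC` format and `run_spec` helper are invented for illustration rather than taken from any particular tool.

```python
from dataclasses import dataclass, field


@dataclass
class Cart:
    """Hypothetical stand-in for the system being developed."""
    items: dict = field(default_factory=dict)

    def add(self, name, price, quantity=1):
        # Keep the price and accumulate the quantity for repeated items.
        _, current = self.items.get(name, (price, 0))
        self.items[name] = (price, current + quantity)

    def total(self):
        return sum(price * qty for price, qty in self.items.values())


# The specification: each scenario is plain data that a non-developer can review.
SPEC = [
    {"name": "empty cart costs nothing", "add": [], "expected_total": 0},
    {"name": "single item", "add": [("book", 12, 1)], "expected_total": 12},
    {"name": "quantities accumulate",
     "add": [("pen", 2, 3), ("pen", 2, 2)], "expected_total": 10},
]


def run_spec(spec, make_system):
    """Run every scenario against a fresh system; return {scenario name: passed}."""
    results = {}
    for scenario in spec:
        system = make_system()
        for name, price, quantity in scenario["add"]:
            system.add(name, price, quantity)
        results[scenario["name"]] = system.total() == scenario["expected_total"]
    return results


if __name__ == "__main__":
    for name, passed in run_spec(SPEC, Cart).items():
        print(f"{'PASS' if passed else 'FAIL'}: {name}")
```

Because the scenarios are data rather than code, changing the specification and changing the tests are the same edit, and the pass/fail report can serve as the arbitrator mentioned above.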

Note that sometimes the specifications are written down using detailed examples of the expected behavior (user stories) that stop short of being machine readable, leaving it up to the developer to translate the specification into tests. This works as long as there is a mechanism to efficiently enforce that these tests are being properly created and kept in sync with the corresponding specifications.

There are other types of testing as well, mainly the kind developers need to create so they can make their part of the system work. Even though these tests do not belong in a deployed production environment, they are part of the system being developed and should be maintained in working order.

Costs

Developing a software system can be expensive. The people putting together the pieces will want to be paid for their time. The challenge is usually how to motivate developers to build the cheapest working system in a timely manner. Future costs of development and maintenance should also be considered, as there is often a fluid boundary between what is done now and what will be done later.

Deployment and maintenance issues are often neglected until the end. The time to deal with them is actually during early development, as the decisions and assumptions made at that time largely determine what those deployment and maintenance tasks are, and if somebody is creating liabilities downstream, they need to account for those somehow. This situation becomes more complicated in fixed bid scenarios, where developers have to choose whether and how to absorb the costs arising from those late complications or to pass them on (externalize them) to somebody else.

Development infrastructure also costs money: proprietary information (such as the source code itself, knowledge repositories, standards, FAQs, and specifications) needs to be stored in ways that let its owners both control access and track changes. Again, the challenge is to provide the development team the tools they need without paying for more than that. There have been great improvements in the availability of inexpensive cloud-based services such as GitHub and Pivotal Tracker, allowing teams of all sizes to get started quickly and at very little expense.

Testing, whether cloud-based or local, on-demand or continuous, may require additional resources in terms of configuration, software, or computing power.

Even cloud-based operation will require some level of monitoring, ongoing maintenance, contingency planning, and disaster preparedness.

Metrics

In order to make intelligent decisions along the way, you need to know exactly where you are now versus where you want to be.

The initial phase of a project is somewhat nebulous, because you are just starting to figure out and write down what exactly the goal is. Progress here is harder to track. One way to get a handle on how complete your specifications are is basically to write down everything you need to specify without worrying about testable scenarios. Once you have done that, the task of translating these more informal requirements into testable scenarios becomes quantifiable, and you know you are done with your specifications when the scenarios describe what you need and nothing else, while also giving you some measure of observability of how much of the system is operational.

During development, there are two primary independent sources of information:

- the tests against your specifications

- statistics derived from the source code

Between these two sets of statistics, you can get a fairly good idea of how quickly your development team is progressing. In order for this to work, however, you need to structure your specifications such that as many tests as possible can be made to pass before the system is complete.

If your user scenarios can be arranged into stages of ever increasing system complexity, with specifications and corresponding tests to confirm that the system works thus far, then these tests become the milestones along the path of progress. You may also have to break up your specifications to describe only certain aspects in certain scenarios. This, incidentally, is the only kind of change in specification which does not create extra work for the development team (assuming you resist the temptation to sneak in extra functionality in this manner).

A well-designed test suite will thus provide milestones at regular intervals, while a poorly designed test suite will leave large stretches of intense development effort without visible progress. To some degree, this requires the author of the specifications to anticipate where the under-the-hood system complexities will be. Experience helps here, but you will never get this absolutely right. Neither do you have to: if the development team has agreed that your test plan provides an appropriate set of milestones, then a lack of visible progress indicates that the team has encountered unforeseen complications, which is exactly the kind of thing you want to measure.
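One way to turn staged scenarios into milestones is sketched below. It assumes test results are available as a mapping from scenario name to pass/fail, and it counts a milestone as reached only when its stage and every earlier stage pass completely. The function `milestone_report` and the stage and scenario names are hypothetical.

```python
def milestone_report(stages, results):
    """Summarize progress per stage.

    stages: ordered list of (stage name, [scenario names])
    results: {scenario name: bool}, e.g. from an automated test run
    Returns (milestones_reached, per_stage), where per_stage is a list of
    (stage name, scenarios passed, scenarios total).
    """
    per_stage = []
    reached = 0
    still_contiguous = True
    for stage_name, scenarios in stages:
        # A scenario without a recorded result counts as not passing.
        passed = sum(1 for s in scenarios if results.get(s, False))
        per_stage.append((stage_name, passed, len(scenarios)))
        if still_contiguous and passed == len(scenarios):
            reached += 1
        else:
            still_contiguous = False
    return reached, per_stage


if __name__ == "__main__":
    stages = [
        ("browse catalog", ["list items", "show item"]),
        ("checkout", ["add to cart", "pay"]),
        ("refunds", ["refund order"]),
    ]
    results = {"list items": True, "show item": True,
               "add to cart": True, "pay": False, "refund order": True}
    reached, per_stage = milestone_report(stages, results)
    print(f"milestones reached: {reached} of {len(stages)}")
    for name, passed, total in per_stage:
        print(f"  {name}: {passed}/{total} scenarios passing")
```

Tracking the per-stage counts over time shows whether a stalled milestone is slowly filling in or not moving at all, which is the signal for unforeseen complications described above.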

Metrics can be tied to incentives, but this has to be part of a useful incentive structure. Paying your development team for making your system work according to specifications is a useful incentive. Paying your developers for making changes to your code base or writing more new lines of code is not, since, in and of itself, this does not get you any closer to a working system.

Those latter metrics (e.g., lines of code, number of changes made, compliance statistics with respect to established coding guidelines, and test coverage) are useful in enforcing compliance with the group's code of conduct or in due diligence situations.

Conclusion

Software system development can be fun, but it takes a certain amount of structure and forethought in order to keep development teams on the path towards success.

Successful development efforts need the right people, the right tools, and an environment that encourages the contributors to make progress towards the stated goal without creating liabilities for what comes next.